PWNED.

I set up the application to overlay all of its information over the real game screen, then started recording. Then I stumbled across this:

[Click to Play Video]

I think there’s something deep and philosophical to be had here. I’m working on all this technology, with complicated calculations and processing, in order to beat the game, and none of it turns out to be as effective as sitting still and doing nothing. Awesome.

BTW, final score, 21-Zip.


Edit: Here’s a YouTube video of an entire perfect game from that spot.

September 5, 2009

Target Acquired.

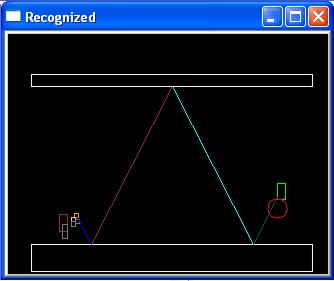

Got the first cut at the target point calculation running. Red circles and lines flying all around! It looks like something out of WarGames. When it runs, it ends up looking like some kind of frantic shooter game that might be fun to play, if you could only figure out what the hell was going on…

Right. Now to kill the jitters.

September 5, 2009

Looking to the Future

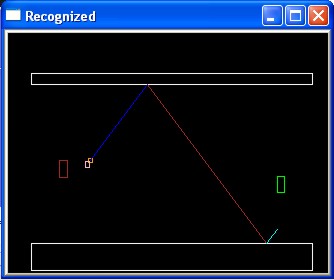

Had a bit of a problem with the bounce on the trajectory projection. First, there were infinite slopes getting in the works and gumming everything up. An infinite slope is a vertical line, and vertical lines don’t cross the paddle plane, and when the trajectory projection doesn’t intersect the paddle plane, you end up with a Forward Intersection Point list with about three million points in it, as the ball is predicted to be happily bouncing up and down over and over and over until the end of RAM. After that, I had a bug where I wasn’t using the last calculated bounce point as the seed for the next bounce point. This led to an angular infinite loop, bouncing between points 1 and 2 forever.

But those are both gone, and what I have now is a real-life forward trajectory projection! It’s really exciting, because it typically completely fails to predict where the ball is actually going to go until the last second!
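For anyone following along, here is a rough Python sketch of a bounce projection that avoids both bugs. It is not the project's code, which goes through OpenCV's .Net binding. It assumes screen coordinates with y growing downward, walls at `top` and `bottom`, and the paddle plane at `x = paddle_x`. The function name and the `max_bounces` cap are hypothetical. Working with a per-frame velocity `(vx, vy)` instead of a slope sidesteps infinite slopes entirely. Each new leg starts from the previous bounce point, which is the seeding fix described above.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def project_trajectory(start: Point, velocity: Point, paddle_x: float,
                       top: float, bottom: float,
                       max_bounces: int = 20) -> Optional[List[Point]]:
    """Return each bounce point, ending with the paddle-plane hit.

    Assumes y grows downward, top < bottom, and the paddle plane is the
    vertical line x = paddle_x. Returns None if the ball can't get there.
    """
    x, y = start
    vx, vy = velocity
    if vx == 0:
        # Purely vertical motion never crosses the paddle plane, so stop
        # here instead of bouncing until RAM runs out.
        return None
    if (paddle_x - x) * vx < 0:
        return None  # moving away from this paddle
    points: List[Point] = []
    for _ in range(max_bounces + 1):
        # Time (in frames) until the ball reaches the paddle plane.
        t_paddle = (paddle_x - x) / vx
        # Time until it reaches whichever wall it is heading toward.
        if vy > 0:
            t_wall = (bottom - y) / vy
        elif vy < 0:
            t_wall = (top - y) / vy
        else:
            t_wall = float("inf")  # zero slope: straight across
        if t_paddle <= t_wall:
            points.append((paddle_x, y + vy * t_paddle))
            return points
        # Seed the next leg from this bounce point, then reflect.
        x, y = x + vx * t_wall, y + vy * t_wall
        points.append((x, y))
        vy = -vy
    return None  # would need more than max_bounces bounces
```

Take a ball at (0, 50) moving 10 pixels right and 10 down per frame, with walls at 0 and 100 and a paddle at x = 100. The function returns one bounce at (50, 100), followed by the paddle hit at (100, 50). The bounce cap is a second safety net. Even if some other bug slips in, the list can't grow without limit.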

Here’s some video of the current status.

Two Point Slope Calculation: Two Point Trajectory Projection

Five Point Calculation: Five Point Trajectory Projection

You can see that the five point average is less hyperactive, but it’s still not good enough. Hopefully the endpoint averaging will solve that problem.
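The post doesn't spell out exactly how the five point average is computed. One standard way to use all five points at once is a least-squares line fit. The Python sketch below swaps in that technique, using hypothetical function names and made-up detections, and compares it with the two-point slope for a ball whose true slope is 0.5.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def two_point_slope(points: Sequence[Point]) -> float:
    """Slope from only the two most recent detections."""
    (x0, y0), (x1, y1) = points[-2], points[-1]
    return (y1 - y0) / (x1 - x0)


def least_squares_slope(points: Sequence[Point]) -> float:
    """Slope of the least-squares line through every point given."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    if sxx == 0:
        raise ValueError("all x values equal: vertical trajectory")
    return sxy / sxx


# Hypothetical detections of a ball whose true slope is 0.5, with
# one-pixel errors of the kind a jittery recognizer produces.
history = [(0, 0), (4, 2), (8, 4), (12, 7), (16, 8)]
print(two_point_slope(history))      # swings with the last frame's error
print(least_squares_slope(history))  # pulled back toward 0.5
```

With this data, the two-point slope comes out at 0.25, half the true value, while the fit gives 0.525. One bad frame can only nudge a five-point fit, but it completely decides a two-point slope.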

But that will have to wait. This is where I’ll leave it for the night. Not bad for starting with nothing this morning… Four days remain.

September 5, 2009

Scratch 2, 1 Remaining, 1 New.

The “negative slope bug” was caused by accidentally reversing X and Y in a statement. Fixing that cleared up the problem with the oddly reversing lines. Of course, a positive slope should have gotten just as screwed by the problem. Whatever. It works now.

I also found a mitigation for the duplicate paddle contours that were causing a jittery trajectory. I changed a parameter to cvFindContours to use the External contour retrieval mode, rather than List. I think the duplicate contours weren’t really duplicates at all, but were actually inner and outer contours around the object. That is, in certain frames, the paddle looked like a donut to the algorithm, rather than a solid block, so I was getting concentric contours representing the inside and outside of that donut. External retrieves only the outermost contours, so each paddle now yields a single outline, which is what I wanted anyway. There are still occasional jitters, but I think I can live with them. It’s running much smoother now.

That still leaves the integer extrapolation issue, which should be fairly straightforward to take care of. Just look in the history for a few more points and get a clearer trajectory.
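The integer issue comes from detected positions being whole pixels. Each coordinate can be off by up to half a pixel, and that error gets divided by the horizontal distance between the two points used for the slope. Measuring across a longer stretch of history therefore shrinks it. Below is a sketch with a hypothetical helper and hand-made detections of a ball whose true slope is 0.325.

```python
from typing import Sequence, Tuple

Point = Tuple[int, int]


def slope_over_span(history: Sequence[Point], span: int) -> float:
    """Slope between the newest point and the one `span` frames earlier."""
    x0, y0 = history[-1 - span]
    x1, y1 = history[-1]
    return (y1 - y0) / (x1 - x0)


# Hypothetical whole-pixel detections of a ball moving 4 px right and
# 1.3 px down per frame (true slope 0.325), each y rounded to the nearest
# pixel with halves rounded up.
history = [(0, 0), (4, 1), (8, 3), (12, 4), (16, 5), (20, 7)]

print(slope_over_span(history, 1))  # 2 / 4: rounding error dominates
print(slope_over_span(history, 5))  # 7 / 20: much closer to 0.325
```

Over one frame, the slope comes out at 0.5. Over five frames, it comes out at 0.35.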

Another bug was exposed after I fixed the two big problems. While I was working out the calculation for the intersection between the extrapolated trajectory and the boundary walls, I noticed a case where there’d be a division by zero if the slope ever happened to be zero, so I put in a guard clause against that. However, at the time, I didn’t consider the fact that a zero slope is perfectly acceptable in Pong. If you hit the ball with the middle of your paddle, it bounces straight back across the playfield.
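Here is a minimal sketch of a wall-intersection step that treats a zero slope as a legitimate shot instead of an error. It assumes screen coordinates with y growing downward. Under that convention, a positive slope heads toward the bottom wall when the ball moves right and toward the top wall when it moves left. The function name and its return shape are hypothetical.

```python
from typing import Tuple, Union

Contact = Union[Tuple[str, float], Tuple[str, float, float]]


def first_contact(x0: float, y0: float, slope: float, moving_right: bool,
                  paddle_x: float, top: float, bottom: float) -> Contact:
    """Return ("paddle", y) or ("wall", x, wall_y) for the next contact."""
    if slope == 0:
        # Straight across: a valid shot that never touches a wall.
        return ("paddle", y0)
    # With y growing downward, a positive slope heads down when moving right
    # and up when moving left.
    heading_down = (slope > 0) == moving_right
    wall_y = bottom if heading_down else top
    x_wall = x0 + (wall_y - y0) / slope  # safe: slope is nonzero here
    wall_first = x_wall < paddle_x if moving_right else x_wall > paddle_x
    if wall_first:
        return ("wall", x_wall, wall_y)
    return ("paddle", y0 + slope * (paddle_x - x0))
```

The division only happens after the zero-slope case has returned, so the guard protects the math without throwing away a perfectly good horizontal shot.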

September 4, 2009

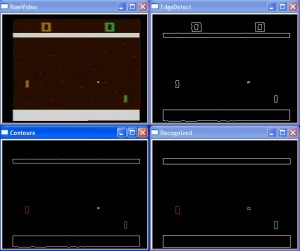

Recognize, yo!

I now have a basic recognizer working off of a video recording of the game. It’s finding the top and bottom boundaries, and most of the time, it knows where the left and right paddles and the ball are. There are still occasional glitches, like when an especially prominent piece of noise decides it wants to be the ball, or when, for some reason, nearly overlapping contours are found over one of the paddles. However, I think it’s at a point where I can proceed to the gameplay logic calculations.

I’m sure there’s some good reason that OpenCV is returning duplicate or near duplicate contours, and I’m sure there’s some known way to deal with the problem. I probably should read the book a bit more…

Nah, the book’s only for when I get completely stuck.

Anyway, here’s a description of what I’ve done so far, for those of you playing at home:

- Pull a frame from a pre-recorded video of Pong. This frame is in the upper left video box.

- Run the frame through a Gaussian smoothing operation twice to tone down the annoying noise in the video.

- Turn the frame greyscale for the next operation.

- Run a Canny edge detector on the image. The results of this are in the upper right box.

- Feed the Canny image into a contour finder. The results, shown in the lower left, look pretty much the same as the Canny image, but the difference is that the Canny results are a regular bitmap image, while the contours are sets of vectors that are more easily manipulated.

- I then run through the contours to find the playfield elements, using the following assumptions:

  - Anything wider than 70% of the screen is either the top boundary or the bottom boundary. If it’s above the middle of the screen, it’s the top; if it’s below the middle, it’s the bottom.

  - The ball and paddles are between the top and bottom boundaries. This lets me throw out the score.

  - While I’m at it, throw out any contours that are really dark or black. They’re probably just noise.

  - Sort what’s left by area, descending.

  - The two biggest things are the left and right paddles. Whichever one is farthest left is the left paddle.

  - The third biggest thing is the ball.

  - Everything else is noise.

- I package up the recognized playfield elements into a nice class containing a bunch of rectangles. These rectangles are drawn in the window on the lower right.
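The playfield rules above translate fairly directly into code. This Python sketch works on bounding rectangles rather than OpenCV contour objects. The `Rect` type, its `brightness` field, and the `min_brightness` cutoff of 40 are hypothetical stand-ins for whatever the real recognizer measures.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rect:
    x: int
    y: int
    w: int
    h: int
    brightness: float  # hypothetical: mean pixel intensity, 0-255


@dataclass
class Playfield:
    top: Optional[Rect] = None
    bottom: Optional[Rect] = None
    left_paddle: Optional[Rect] = None
    right_paddle: Optional[Rect] = None
    ball: Optional[Rect] = None


def classify(rects: List[Rect], screen_w: int, screen_h: int,
             min_brightness: float = 40) -> Playfield:
    pf = Playfield()
    others = []
    for r in rects:
        if r.w > 0.7 * screen_w:
            # Wide things are boundaries: top or bottom by which half they're in.
            if r.y + r.h / 2 < screen_h / 2:
                pf.top = r
            else:
                pf.bottom = r
        else:
            others.append(r)
    upper = pf.top.y + pf.top.h if pf.top else 0
    lower = pf.bottom.y if pf.bottom else screen_h
    # Keep only bright things between the boundaries (this drops the score).
    inside = [r for r in others
              if r.y >= upper and r.y + r.h <= lower
              and r.brightness >= min_brightness]
    inside.sort(key=lambda r: r.w * r.h, reverse=True)
    if len(inside) >= 2:
        a, b = inside[0], inside[1]
        pf.left_paddle, pf.right_paddle = (a, b) if a.x <= b.x else (b, a)
    if len(inside) >= 3:
        pf.ball = inside[2]
    # Anything further down the list is treated as noise.
    return pf


pf = classify([
    Rect(10, 20, 300, 5, 200),   # top boundary
    Rect(10, 215, 300, 5, 200),  # bottom boundary
    Rect(100, 5, 20, 10, 200),   # score, above the top boundary
    Rect(290, 100, 6, 30, 200),  # right paddle
    Rect(20, 80, 6, 30, 200),    # left paddle
    Rect(150, 120, 4, 4, 200),   # ball
    Rect(50, 50, 10, 10, 10),    # dark noise
], screen_w=320, screen_h=240)
```

Both paddles are usually the same size, so the sort alone can't say which is which. That is why left and right are decided by x position after the two biggest rectangles are picked.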

Going forward, I’ll keep a list of the recognized playfield elements from the last X frames and use them to calculate the position and trajectory of the ball. Since I know where the ball is across multiple frames and where the boundaries are, I can extrapolate where the paddle needs to be. The goal for this phase will be to get the calculations written and to display an overlay on a live game that tells me exactly where I need to place my paddle as soon as the ball starts heading my way. Most of this is pure algebra, but there may be complications if the recognition isn’t good enough.
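Keeping the last X frames maps naturally onto a fixed-length queue. This sketch uses a hypothetical class built on Python's `collections.deque` with `maxlen`. Frames where the ball wasn't found are stored as `None`. Velocity is measured between the oldest and newest sightings, so a dropped frame doesn't distort it.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Point = Tuple[float, float]


class BallHistory:
    """Ball centers from the last `max_frames` frames (None = not found)."""

    def __init__(self, max_frames: int = 5) -> None:
        # A deque with maxlen silently drops the oldest frame when full.
        self.frames: Deque[Optional[Point]] = deque(maxlen=max_frames)

    def add(self, center: Optional[Point]) -> None:
        self.frames.append(center)

    def velocity(self) -> Optional[Point]:
        """Average per-frame motion between the oldest and newest sightings."""
        seen = [(i, c) for i, c in enumerate(self.frames) if c is not None]
        if len(seen) < 2:
            return None
        (i0, (x0, y0)), (i1, (x1, y1)) = seen[0], seen[-1]
        n = i1 - i0
        return ((x1 - x0) / n, (y1 - y0) / n)


history = BallHistory(max_frames=3)
for center in [(0, 0), (4, 2), None, (12, 6)]:
    history.add(center)
print(history.velocity())  # (0, 0) has aged out; uses (4, 2) and (12, 6)
```

In this example, the velocity works out to 4 pixels right and 2 down per frame, even though the middle frame lost the ball.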

The code has been checked in up to this point. https://mathpirate.net/svn/

September 4, 2009

In The Future, There Will Be Robots

So, I’m going to be building a robot that can play Pong over the next five days.

Why am I going to spend five days building a robot that can play Pong?

Well, first of all, it’ll be totally cool to have a Pong playing robot. You simply cannot deny the awesome factor of that.

Second, it will be fun to do. I’ve wanted to learn about computer vision for a while, and I’ve wanted to tinker with robotics for a while, and I love video games. So why not combine all of those into one big crazy project?

And finally, ATARI PLAYING ROBOT! Do you really need any other reason?

I chose Pong as the first game to attempt because it has uncomplicated graphics and clearly defined rules that can be described using fairly simple mathematical models. The ball will remain in motion at a constant speed in a straight line until it hits something else. (Newton’s First Law of Motion, hence all that “Newtonian Motion Simulator” nonsense earlier.) There’s no need for any kind of pathfinder or decision making AI or similar kind of complicated logic for playing the game. If I can detect the position of the ball in two consecutive frames, and I know where the walls are, then I can predict exactly where my paddle needs to be placed in order to hit the ball back. Computers are very good at calculating that sort of thing. Pong is also a paddle-controlled game, which means the robot only needs to be able to rotate clockwise or counterclockwise. No complicated multi-axis positioning like a joystick would require.
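Given two ball positions and the wall positions, the prediction can even be done without stepping through each bounce. Extend the straight line to the paddle as if the walls weren't there. Then fold the result back into the playfield, since each bounce mirrors the path. Here is a sketch in Python with hypothetical names. It assumes the ball is already moving toward the paddle and treats the ball as a point.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


def predict_paddle_y(p1: Point, p2: Point, paddle_x: float,
                     top: float, bottom: float) -> Optional[float]:
    """Where the ball crosses x = paddle_x, given two consecutive positions.

    Assumes the ball is moving toward the paddle, y grows downward, and the
    walls sit at y = top and y = bottom.
    """
    (x1, y1), (x2, y2) = p1, p2
    if x2 == x1:
        return None  # no horizontal motion: never reaches the paddle
    slope = (y2 - y1) / (x2 - x1)
    # Pretend the walls aren't there and extend the line to the paddle.
    unfolded = y2 + slope * (paddle_x - x2)
    # Each bounce mirrors the path, so fold the result back into the
    # field; the pattern repeats every two field heights.
    height = bottom - top
    u = (unfolded - top) % (2 * height)
    return top + (u if u <= height else 2 * height - u)
```

A ball seen at (0, 50) and then (10, 60), in a field with walls at 0 and 100, would reach y = 150 at a paddle at x = 100 if nothing stopped it. Folding that value gives 50, the same answer a bounce-by-bounce projection reaches.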

(It should be noted that the fundamentals needed to play Pong are the same things that are needed to play many other games. If you get Pong, then you get Breakout almost for free, because Breakout is Pong rotated 90 degrees. Similar to Breakout is the frantic bomb-catching game of Kaboom!. And, although they’re visually very different and utilize very different controls, games like Rock Band or Guitar Hero are essentially variants of Kaboom!. Which means that once I’ve built something that can play Pong, I’ll have a clear path to a 5-star performance on Green Grass and High Tides.)

I’ve seen other people make game-playing contraptions, but none of them have tried actually watching the screen and reacting to what’s there. I’ve seen a Wii Bowling Robot that can bowl a perfect game, but it’s taking advantage of the fact that if you roll perfectly straight, you get a strike. I’ve seen several Rock Band/GH bots, but they’re hacked controllers that play back a recording. And I’ve seen a program that can play a kick-ass game of Super Mario World, but it’s an AI built directly into the game code. I’ve never seen anyone who has made a robot that uses a camera to play the game using the normal TV display and normal controllers. That’s my goal here: to build a robot that can play games on a completely unmodified Atari 2600.

For the robot, I’m going to use Lego Mindstorms. That seemed like the easiest way to go, especially since I have no electrical engineering experience. If this goes well, I might step it up a notch and try a raw microprocessor and motors and risk of electric shock and fire type robot, but for now, the Legos seem good. I bought the kit about a year ago and it’s been hanging around on a shelf ever since then. It was actually around that time that I first started thinking of building an Atari controlling robot.

Of course, all of this plan is extremely difficult if I can’t see the game. In order to do that, I plan to use OpenCV. It’s an open source computer vision library (with an available .Net binding), that seems to have all manner of image processing and recognition functionality in a package that looks disturbingly easy to use. Whether or not it is, I’ll find out soon enough. It’s the same software that’s used in some of those DARPA challenge rally cars that drive themselves across the desert, so I figure it oughta be able to distinguish a couple of big blocky rectangles moving around on a TV screen. (Unless the raster scan on my CRT TV throws it off…)

So, the end-to-end plan is this: I point a camera at the TV, showing a game of Atari 2600 Pong. The camera feeds into an OpenCV based processor running on my computer. The computer calculates where the paddle needs to be in order to hit the ball, and uses Bluetooth to tell the Mindstorms robot to rotate the Atari paddle left or right. The paddle hits the ball back. Because the AI on the Video Olympics version of Pong is simple “follow” logic that doesn’t move fast enough to reach the ball in time when the ball reaches a certain speed, my robot is basically guaranteed to be able to beat the AI.
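The last step of that plan boils down to comparing the predicted target with where the paddle currently is. This sketch only decides which way to turn and doesn't talk to the Mindstorms brick at all. Several details are hypothetical: the command strings, the 3-pixel dead band, and which rotation direction moves the on-screen paddle up.

```python
def paddle_command(paddle_y: float, target_y: float, dead_band: float = 3.0,
                   left_moves_up: bool = True) -> str:
    """Pick 'left', 'right', or 'hold' for the paddle knob.

    All three command names, the dead band, and the left_moves_up mapping are
    hypothetical; y grows downward on screen.
    """
    error = target_y - paddle_y
    if abs(error) <= dead_band:
        # Close enough: holding still avoids hunting back and forth.
        return "hold"
    need_up = error < 0
    return "left" if need_up == left_moves_up else "right"
```

The dead band is there so the robot doesn't twitch the knob back and forth over a pixel or two of recognition jitter once the paddle is already in place.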

Seems possible, eh?

I plan to tackle the computer vision stuff first, then move onto the robotics.

September 3, 2009