Achievement Unlocked: Big Truck Of Fail

That’s it. I’m calling it. This Crazy Project Weekend is over.

And it’s a big truck of fail.

The biggest problems are the motors. They just don’t do what I tell them to do. If they did, this would be a different story. But I’ve spent over three days tweaking the motors and the robotics and I just can’t get it working. Maybe I can get a Stelladaptor and try tweaking it with direct feedback. Maybe I’d be able to do continuous smooth motion if I could have tracked all the bombs properly. Maybe I just don’t know what I’m doing.

At least I was able to identify the playfield elements and get the computer to tell what the next move should be, even if I couldn’t actually get it to make that move. The basic recognition and logic were a lot easier than they were for Pong, mainly because trajectories didn’t really matter. However, I wasn’t quite able to get the bomb tracking/prediction logic working, which would have reduced the tendency for the robot to get distracted temporarily and miss a bomb. The full tracking also would have made it possible to detect patterns and move smarter. I also get the feeling that there’s something already in OpenCV that would have taken care of the object detection and motion tracking for me. That library is so big and I’m not a computer vision expert, so I don’t really know what’s there or how to use it all. The book and the documentation aren’t always enough.

And then that virus. Stupid virus. Made me waste half a day because the bloody computer stopped working. THAT WAS AWESOME.

The segmented auto-calibration thing did work. I was able to adjust the robot power and swap out gears and the calibration generally figured out the new pixel/degree ratio. If the motors were more consistent, then it probably would have worked better. At any rate, that’s a decent technique that I’ll have to remember for the future. And I’ll have to clean up the code for it; right now it’s kinda messy.

In the end, I did not accomplish what I set out to do. The best score the robot ever got was 63, and that was a fluke. And I didn’t even get close to trying to get it to play on a real TV.

March 1, 2010

Learning From Mistakes

It made it through Round 1 and got a score of 56 points. Time to take a step back and analyze what I’ve observed.

- The biggest problem is losing track of the bomb. It will be on track to catch the lowest bomb, when suddenly the detection will skip a beat and it’ll take off across the screen for something else and can’t get back fast enough to catch the bomb. I have to fix this first. I’m pretty sure I have outlier detection from the Pong game that I can reuse here.

- The segmented calibration that I described back on the first day seems to be working fairly well. That’s where the robot moves the paddle knob a known number of degrees, then the program sees how far that moved the buckets on screen, and calculates how many pixels a degree is. This lets the program know that to catch the bomb that’s 70 pixels away, it will have to rotate the paddle 30 degrees. I suspect that I will have to tweak the algorithm a bit, though. Mainly, I think it will need to do several calibration turns to refine the numbers.

- The response time is going to be a killer. I have little doubt that the motor itself can move the paddle into position fast enough. When it moves, it zips across the screen. The problem is that it doesn’t get moving as fast as it needs to and there’s an unacceptable lag between commands. I think I might still be seeing an echo of the waggle. It’s not as visible, due to the weight on the spinner assembly now, but I think that delay is still there. Even if I can fix some of the other issues, I suspect that the response time is going to prevent the robot from getting past around level 3.

- The NXT has an inactivity timer. The robot turned itself off in the middle of a game. Gotta take care of that…

- I don’t want to keep hitting the button to start a round. I’m going to have to implement the button press. Unfortunately, implementing the button press means I’ll have to change around how the PaddleController class works. It’s a dirty hack right now, I gotta clean that up.

- I like that Kaboom! is a much faster game cycle than Pong. With Pong, I couldn’t always tell what was wrong right away. Sometimes it took a minute or two to get the ball in a situation where something went wrong. I’d implement a fix and try again, and again, it would take a minute or two. Kaboom! doesn’t last that long. The games at this point are less than a minute long, sometimes much less. If the robot is going to lose, it’s going to lose fast. It’s not going to end up in a cycle where the ball keeps bouncing around between the same two points over and over and over for five minutes. If a game in Kaboom! lasts five minutes, then it’s an amazing success.
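The calibration idea from the list above (turn the knob a known number of degrees, watch how far the buckets move, work out pixels per degree) can be sketched in a few lines. This is only an illustration, not the project's actual code: the function names and the sample measurements are made up, and pooling several turns into one ratio is just one assumed way of doing the "several calibration turns" refinement.

```python
def pixels_per_degree(turns):
    """Estimate the pixel/degree ratio from several calibration turns.

    `turns` is a list of (degrees_turned, pixels_moved) pairs. Pooling the
    totals (rather than trusting a single turn) is an assumption about how
    the refinement might work.
    """
    total_degrees = sum(abs(degrees) for degrees, _ in turns)
    total_pixels = sum(abs(pixels) for _, pixels in turns)
    if total_degrees == 0:
        raise ValueError("need at least one non-zero calibration turn")
    return total_pixels / total_degrees


def degrees_for(pixel_offset, ratio):
    """Convert an on-screen distance in pixels into a knob rotation in degrees."""
    return pixel_offset / ratio


# Hypothetical measurements: three 30-degree turns, slightly inconsistent.
ratio = pixels_per_degree([(30, 70), (30, 72), (-30, -68)])
rotation = degrees_for(70, ratio)  # about 30 degrees for a bomb 70 pixels away
```

With those made-up numbers the pooled ratio comes out to 210 pixels over 90 degrees, which matches the "70 pixels is 30 degrees" example in the list.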

February 28, 2010

56 Points!

February 28, 2010

Round 1 Success!

February 28, 2010

200% Improvement!

Doubled its score in just a few minutes. If I can keep up this rate of improvement, it’ll be awesome in no time!

February 28, 2010

7 > 0

February 28, 2010

That Would Have Been Good To Know…

There’s a function called cvCloneImage which, well, clones an image. That would have been helpful to know about SIX MONTHS AGO.

February 28, 2010

Guidance System Video

Here’s a video of the game processing.

[Video: /log/wp-content/uploads/2010/02/KaboomDirections2.wmv]

I don’t think it’s quite as fascinating to watch as the laser line and target circle trajectory projections of Pong, but I am happier that the game is much cleaner to work with.

This was a recording of the game processing a previously recorded play session. It’s not a recording of the robot playing, nor is it a recording of me playing with the augmented display.

Notice that I’m measuring distance from the edge of the bucket, not the center. I’m hoping that will help alleviate some of the overrun effect, since the paddle will have the entire width of the bucket available as a buffer.
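Measuring from the bucket edge rather than the center could look something like the sketch below. It is an assumption about the approach, not the code from the video: the function name and the convention that a negative result means "move left" are invented for illustration.

```python
def offset_to_bomb(bomb_x, bucket_left, bucket_right):
    """Signed horizontal distance from the nearest bucket edge to the bomb.

    Returns 0 when the bomb is already somewhere over the bucket, so the
    whole bucket width acts as a dead zone that absorbs overrun.
    """
    if bomb_x < bucket_left:
        return bomb_x - bucket_left    # negative: bomb is to the left
    if bomb_x > bucket_right:
        return bomb_x - bucket_right   # positive: bomb is to the right
    return 0                           # bomb is over the bucket, stay put
```

Compared with measuring from the center, this asks for smaller moves, and asks for none at all once any part of the bucket is under the bomb.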

February 28, 2010

Calculating ROI

I’ve managed to cut the processing time from 160 ms down to 70 ms, and there’s still room for improvement. I did it by using something called an ROI, or “Region of Interest”.

Pretty much every image operation in OpenCV can be restricted to the ROI of an image. It’s a rectangular mask, specifying the region that you’re interested in. If you want to blur out a box in an image, you set the ROI on the image, call the blur function, then unset the ROI, and you’ll have a nice blurred box in your image. You can also use the ROI to tell OpenCV to only pay attention to a specific area of an image when it’s doing some kind of CPU intensive processing, which is what I did.

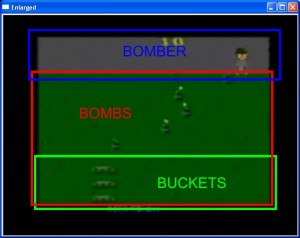

Look at the Kaboom! playfield for a moment. What do you see?

For starters, there’s that big black border around the edge. I don’t need to process any of that at all for any reason. Any cycles spent dealing with that are a complete waste of time.

Then, each item I want to recognize lives in a particular part of the screen. The bombs are only in the green area, the buckets live in the bottom third of the green zone, and the bomber lives up in the grey skies.

So why should I look around the entire screen for the buckets, when I know that they’re going to live in a strip at the bottom of the green area? That’s where the ROI comes in.

I can tell the processor to only look within specific areas for a given object. There will be no matches outside of that zone. If a bucket ever ends up in the sky, it means my Atari is broken and I will be too sad to continue. If it thinks that one of the bombs looks like the bomber, then it’s a false hit that I don’t want to know about. By restricting the ROI on the detection, I’ve seen a significant boost in performance.
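Here is a toy version of the idea in plain Python, with no OpenCV involved. The frame is just a list of rows, the ROI is an `(x, y, width, height)` rectangle, and `find_in_roi` is a hypothetical helper, not a library call. In the OpenCV C API the same restriction would be set on the image itself with `cvSetImageROI` and cleared again with `cvResetImageROI`.

```python
def find_in_roi(frame, target, roi):
    """Return the bounding box (min_x, min_y, max_x, max_y) of pixels equal
    to `target`, looking only inside `roi`, or None if nothing matches.

    `frame` is a list of rows and `roi` is (x, y, width, height). Pixels
    outside the rectangle are never visited, so they cost nothing and
    cannot produce false hits.
    """
    x0, y0, width, height = roi
    hits = [(x, y)
            for y in range(y0, y0 + height)
            for x in range(x0, x0 + width)
            if frame[y][x] == target]
    if not hits:
        return None
    xs = [x for x, _ in hits]
    ys = [y for _, y in hits]
    return (min(xs), min(ys), max(xs), max(ys))


# Tiny 8x6 "screen": a bucket ('B') near the bottom, plus one stray
# bucket-colored pixel up in the sky that should be ignored.
frame = [["."] * 8 for _ in range(6)]
frame[4][2] = frame[4][3] = "B"
frame[0][5] = "B"

bottom_strip = (0, 3, 8, 3)   # only the bottom three rows
bucket_box = find_in_roi(frame, "B", bottom_strip)
```

Searching the bottom strip visits half as many pixels as searching the whole frame, and the stray pixel in the sky never gets a chance to stretch the bounding box.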

And that 70 ms is what I got for using large ROIs, ones considerably larger than shown in the image above. If I limit the processing to those areas, I expect to be able to knock it down to around 40-45 ms, maybe even less.

Of course, in order to do that, I’d rather not hard code pixel dimensions. I’m going to have to find the grass and the sky automatically.
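One possible way to find the grass and the sky without hard-coding pixel rows is to look at the dominant color of each scanline and group consecutive rows that agree. This is a guess at an approach, not something the project is confirmed to have used, and `color_bands` is a made-up name. It also assumes the frame has already been reduced to a small set of exact color values.

```python
from collections import Counter


def color_bands(frame):
    """Split a frame into horizontal bands by each row's dominant color.

    `frame` is a list of rows of hashable pixel values. Returns a list of
    (color, first_row, last_row) tuples, top to bottom.
    """
    bands = []
    for y, row in enumerate(frame):
        dominant = Counter(row).most_common(1)[0][0]
        if bands and bands[-1][0] == dominant:
            # Same color as the band above: extend it down to this row.
            bands[-1] = (dominant, bands[-1][1], y)
        else:
            bands.append((dominant, y, y))
    return bands


# Toy frame: K = black border, S = sky, G = grass, B = a bomb pixel.
toy = ([list("KKKKKKKK")] * 2 +
       [list("KSSSSSSK")] * 3 +
       [list("KGGBGGGK")] * 4 +
       [list("KKKKKKKK")])
bands = color_bands(toy)
```

The row ranges of the sky and grass bands could then be turned into ROI rectangles, so the dimensions follow whatever the capture actually looks like.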

February 28, 2010

I HAZ A BUKKIT

February 27, 2010