Guidance System Video

Here’s a video of the game processing.

Video: /log/wp-content/uploads/2010/02/KaboomDirections2.wmv

I don’t think it’s quite as fascinating to watch as the laser-line and target-circle trajectory projections from Pong, but I’m happier with this game because it’s much cleaner to work with.

This was a recording of the game processing a previously recorded play session. It’s not a recording of the robot playing, nor is it a recording of me playing with the augmented display.

You may notice that I’m measuring distance from the edge of the bucket, not the center. I’m hoping that will reduce the overrun effect, since the entire width of the bucket is then available as a buffer.
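Here’s a minimal sketch of the difference between the two measurements. The function names and pixel values are hypothetical, not taken from my code; it assumes the buckets are described by their left and right x positions and that a bomb is caught when its x lies between them.

```python
def move_needed(bomb_x: float, bucket_left: float, bucket_right: float) -> float:
    """Signed move (pixels) so the bomb lands in the buckets, measured from
    the nearest bucket edge. Negative = move left, positive = move right,
    0.0 = already underneath. Hypothetical helper for illustration."""
    if bomb_x < bucket_left:
        # Bomb is to the left: only the left edge has to reach it.
        return bomb_x - bucket_left
    if bomb_x > bucket_right:
        # Bomb is to the right: only the right edge has to reach it.
        return bomb_x - bucket_right
    return 0.0


def move_needed_from_center(bomb_x: float, bucket_left: float, bucket_right: float) -> float:
    """The alternative: distance from the bucket center to the bomb."""
    center = (bucket_left + bucket_right) / 2
    return bomb_x - center
```

With the buckets spanning x = 100 to 132 and a bomb at x = 150, the edge measurement asks for a move of 18 pixels, the center measurement 34. If the buckets overshoot the 18, the bomb stays inside them for up to another 32 pixels (the bucket width), which is the buffer against overrun.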

February 28, 2010

Guidance Systems Online

The computer can now tell you where to go.

Bombs that will be caught by the buckets in their current position turn green; bombs that will be missed turn red. If the buckets will catch the lowest bomb, they turn green; otherwise they turn pink and show a pointer indicating how far you need to move and in which direction. If no bombs are detected, the buckets turn white.
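The color rules boil down to a few comparisons. This is a hypothetical sketch, not my actual code: it assumes bombs fall straight down (so only their x position matters), that a larger y means lower on the screen, and that the pointer distance is measured from the nearest bucket edge.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Bomb:
    x: float  # horizontal center, pixels
    y: float  # vertical position, pixels (larger = lower on screen)


def will_catch(bomb: Bomb, bucket_left: float, bucket_right: float) -> bool:
    # Assumes a straight vertical fall, so a catch depends only on x.
    return bucket_left <= bomb.x <= bucket_right


def guidance(bombs: List[Bomb], bucket_left: float,
             bucket_right: float) -> Tuple[List[str], str, float]:
    """Return (bomb colors, bucket color, signed move for the pointer)."""
    colors = ["green" if will_catch(b, bucket_left, bucket_right) else "red"
              for b in bombs]
    if not bombs:
        return colors, "white", 0.0          # nothing detected
    lowest = max(bombs, key=lambda b: b.y)   # the next bomb to arrive
    if will_catch(lowest, bucket_left, bucket_right):
        return colors, "green", 0.0
    # Pointer distance from the nearest bucket edge; negative = move left.
    if lowest.x < bucket_left:
        move = lowest.x - bucket_left
    else:
        move = lowest.x - bucket_right
    return colors, "pink", move
```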

February 28, 2010

Calculating ROI

I’ve managed to cut the processing time from 160 ms down to 70 ms, and there’s still room for improvement. I did it by using something called an ROI, or “Region of Interest”.

Pretty much every image operation in OpenCV can be restricted to the ROI of an image. The ROI is a rectangle specifying the region you’re interested in. If you want to blur out a box in an image, you set the ROI on the image, call the blur function, then unset the ROI, and you’ll have a nice blurred box in your image. You can also use the ROI to tell OpenCV to only pay attention to a specific area of an image when it’s doing some kind of CPU-intensive processing, which is what I did.
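In the C API the set/unset steps are `cvSetImageROI` and `cvResetImageROI`. In OpenCV’s Python bindings an image is just a NumPy array, so an ROI is a slice, and a slice is a view into the original pixels. The sketch below shows the blurred-box example that way; to keep it dependent only on NumPy it uses a hand-rolled box blur on a grayscale image, and the function names are hypothetical. With OpenCV installed, something like `roi[...] = cv2.blur(roi, (3, 3))` would do the equivalent job.

```python
import numpy as np


def box_blur(region: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k mean filter for a 2-D (grayscale) array, edges replicated."""
    pad = k // 2
    padded = np.pad(region.astype(np.float64), pad, mode="edge")
    out = np.zeros(region.shape, dtype=np.float64)
    h, w = region.shape
    # Sum the k*k shifted copies, then divide to get the mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return (out / (k * k)).astype(region.dtype)


def blur_box(image: np.ndarray, x: int, y: int, w: int, h: int, k: int = 3) -> np.ndarray:
    """Blur only the rectangle (x, y, w, h) of `image`, in place."""
    roi = image[y:y + h, x:x + w]   # a view, not a copy: this is the "ROI"
    roi[...] = box_blur(roi, k)     # writing through the view changes the image
    return image
```

There is no “unset” step here: the rest of the image was never part of the slice in the first place.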

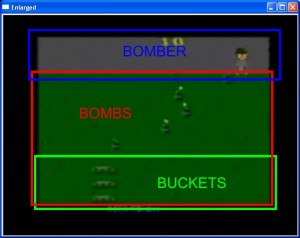

Look at the Kaboom! playfield for a moment. What do you see?

For starters, there’s that big black border around the edge. I don’t need to process any of that at all for any reason. Any cycles spent dealing with that are a complete waste of time.
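The border doesn’t move, so it only needs to be found once. A minimal NumPy sketch, assuming “black” means every channel is at or below a brightness threshold of 16 (a guess, not a measured value); the function name is hypothetical.

```python
import numpy as np


def content_bbox(image: np.ndarray, threshold: int = 16):
    """Bounding box (x, y, w, h) of pixels brighter than `threshold`,
    or None if the whole frame is dark."""
    # For color frames, a pixel counts as content if any channel is bright.
    gray = image if image.ndim == 2 else image.max(axis=2)
    mask = gray > threshold
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    y0, y1 = rows[0], rows[-1] + 1
    x0, x1 = cols[0], cols[-1] + 1
    return int(x0), int(y0), int(x1 - x0), int(y1 - y0)
```

After computing `x, y, w, h = content_bbox(first_frame)` once, every later frame can be cut down to `frame[y:y + h, x:x + w]` before any real processing.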

Then, each item I want to recognize lives in a particular part of the screen. The bombs are only in the green area, the buckets live in the bottom third of the green zone, and the bomber lives up in the grey skies.

So why should I look around the entire screen for the buckets, when I know that they’re going to live in a strip at the bottom of the green area? That’s where the ROI comes in.

I can tell the processor to only look within specific areas for a given object. Anything it finds outside that zone can’t be a real match. If a bucket ever ends up in the sky, it means my Atari is broken and I will be too sad to continue. If it thinks that one of the bombs looks like the bomber, then it’s a false hit that I don’t want to know about. Restricting the ROI for each detection gave me a significant boost in performance.
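A sketch of a search restricted to an ROI. The detector here is a brute-force sum-of-squared-differences template match, used purely as a stand-in (OpenCV’s `cv2.matchTemplate` with `TM_SQDIFF` computes the same score far faster); the function name and the `(x, y, w, h)` ROI layout are assumptions. The step that matters is the last one: a match found inside the ROI has ROI-local coordinates, so the ROI’s origin has to be added back.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def find_in_roi(image: np.ndarray, template: np.ndarray, roi):
    """Best SSD match of a grayscale `template` inside `roi` = (x, y, w, h).

    Returns (x, y) of the match's top-left corner in full-image coordinates.
    Positions outside the ROI are never scored, so they can't be false hits.
    """
    x, y, w, h = roi
    region = image[y:y + h, x:x + w].astype(np.float64)
    t = template.astype(np.float64)
    # Every template-sized window in the ROI: shape (H', W', th, tw).
    windows = sliding_window_view(region, t.shape)
    ssd = ((windows - t) ** 2).sum(axis=(2, 3))
    ry, rx = np.unravel_index(np.argmin(ssd), ssd.shape)
    # Translate from ROI-local back to full-image coordinates.
    return int(x + rx), int(y + ry)
```

As a usage example, put one copy of a bucket-shaped template near the bottom of a frame and a decoy copy near the top: searching only the bottom strip returns the real one, while searching the whole frame returns whichever copy it scans first.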

And that 70 ms is what I got using large ROIs, considerably larger than the ones shown in the image above. If I limit the processing to those smaller areas, I expect to be able to knock it down to around 40-45 ms, maybe even less.
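Put in frames-per-second terms (plain arithmetic on the timings above, assuming one processed frame per measured interval):

$$
f = \frac{1000\ \text{ms}}{t_{\text{frame}}}:\qquad
t = 160\ \text{ms} \Rightarrow f = 6.25\ \text{fps},\quad
t = 70\ \text{ms} \Rightarrow f \approx 14.3\ \text{fps},\quad
t = 40\text{ to }45\ \text{ms} \Rightarrow f \approx 22\text{ to }25\ \text{fps}
$$

So the ROIs already give roughly a 2.3x speedup (160 / 70), and the tighter regions would push it past 3.5x.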

Of course, to do that I’d rather not hard-code pixel dimensions. I’m going to have to find the grass and the sky automatically.
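One possible way to find them without hard-coded dimensions is to look for horizontal bands of rows dominated by a color. This is only a sketch: the color tests and the 50% row threshold are guesses rather than measured Kaboom! palette values, and all the names are hypothetical. The tests treat red and blue symmetrically, so they work on OpenCV’s BGR frames as well as RGB.

```python
import numpy as np


def band_rows(image: np.ndarray, mask_fn, min_fraction: float = 0.5):
    """(top, bottom) rows, bottom exclusive, spanning every row in which at
    least `min_fraction` of the pixels satisfy `mask_fn`; None if no row does."""
    mask = mask_fn(image.astype(np.int16))   # int16 avoids uint8 wraparound
    fraction = mask.mean(axis=1)             # share of matching pixels per row
    rows = np.flatnonzero(fraction >= min_fraction)
    if rows.size == 0:
        return None
    return int(rows[0]), int(rows[-1]) + 1


def is_green(img: np.ndarray) -> np.ndarray:
    # Green channel clearly above both others (guessed margin of 40).
    c0, g, c2 = img[..., 0], img[..., 1], img[..., 2]
    return (g > c0 + 40) & (g > c2 + 40)


def is_grey(img: np.ndarray) -> np.ndarray:
    # Channels roughly equal and not too dark (guessed limits).
    c0, g, c2 = img[..., 0], img[..., 1], img[..., 2]
    return (abs(c0 - g) < 16) & (abs(g - c2) < 16) & (g > 60)


def bucket_roi(green_top: int, green_bottom: int, x: int, w: int):
    """Bottom third of the green band as an (x, y, w, h) ROI."""
    third = (green_bottom - green_top) // 3
    return x, green_bottom - third, w, third
```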

February 28, 2010

I HAZ A BUKKIT

February 27, 2010